Supervised Learning (Part-1)

Logistic Regression

Logistic regression is used when the dependent variable (target) is categorical.

For example,

- To predict whether an email is spam (1) or not (0)

- To predict whether a tumor is malignant (1) or not (0)

Simple Logistic Regression

Model

Output = 0 or 1

Hypothesis => Z = WX + B, where W is the weight vector, X is the input feature vector, and B is the bias

hΘ(x) = sigmoid(Z)

Sigmoid Function
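
The sigmoid function squashes any real number z into the open interval (0, 1): sigmoid(z) = 1 / (1 + e^(-z)). Its output can therefore be read as the estimated probability that the target is 1. A minimal NumPy sketch of the model above follows; the weight, bias, and input values are made-up numbers for illustration only, and the function names are not from any particular library.

```python
import numpy as np

def sigmoid(z):
    """Map any real z to the open interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def hypothesis(X, W, B):
    """h(x) = sigmoid(WX + B), computed for every row of X."""
    Z = X @ W + B
    return sigmoid(Z)

# Illustrative values only (not taken from any dataset).
W = np.array([2.0, -1.0])
B = 0.5
X = np.array([[0.0, 0.0], [1.0, 3.0]])
probs = hypothesis(X, W, B)  # one estimated probability per row
```

Note that sigmoid(0) is exactly 0.5, so Z = 0 is the point where the model is undecided between the two classes.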

Types of Logistic Regression

1. Binary Logistic Regression: The categorical response has only two possible outcomes. Example: Spam or Not Spam

2. Multinomial Logistic Regression: Three or more categories without ordering. Example: Predicting which food is preferred more (Veg, Non-Veg, Vegan)

3. Ordinal Logistic Regression: Three or more categories with ordering. Example: Movie rating from 1 to 5

Decision Boundary

To predict which class a data point belongs to, a threshold can be set. The estimated probability is compared against this threshold and the data point is assigned to a class accordingly.

For example, if the predicted probability is ≥ 0.5, classify the email as spam; otherwise, classify it as not spam.

The decision boundary can be linear or non-linear. Increasing the polynomial order of the features yields a more complex decision boundary.
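
The thresholding rule can be sketched in a few lines of Python. The `classify` helper and the probability values below are hypothetical, chosen only to show what happens on either side of the 0.5 threshold.

```python
import numpy as np

def classify(probabilities, threshold=0.5):
    """Return 1 (spam) where probability >= threshold, else 0 (not spam)."""
    return (np.asarray(probabilities) >= threshold).astype(int)

# Hypothetical predicted probabilities for four emails.
probs = [0.92, 0.50, 0.49, 0.07]
labels = classify(probs)
```

Because sigmoid(Z) ≥ 0.5 exactly when Z ≥ 0, a threshold of 0.5 places the decision boundary at WX + B = 0.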

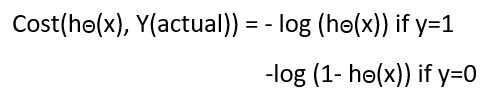
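
As a rough illustration of a non-linear boundary, the sketch below uses second-order features so that Z = x1² + x2² - r². The weights are hand-picked rather than learned, and the function name `predict_circle` is made up for this example. The resulting boundary Z = 0 is a circle of radius r.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def predict_circle(x1, x2, radius=1.0):
    """Classify a point with a circular decision boundary."""
    # Hand-picked weights (an assumption, not learned from data):
    # z = 1*x1^2 + 1*x2^2 - radius^2
    z = x1**2 + x2**2 - radius**2
    return int(sigmoid(z) >= 0.5)

inside = predict_circle(0.2, 0.3)   # point inside the unit circle
outside = predict_circle(1.5, 0.0)  # point outside the unit circle
```

With only first-order features (x1, x2) the same model could draw nothing but a straight line.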

Cost Function

Why can't the cost function used for linear regression be used for logistic regression?

Linear regression uses mean squared error as its cost function. If this is used for logistic regression, the sigmoid in the hypothesis makes it a non-convex function of the parameters (theta). Gradient descent is guaranteed to converge to the global minimum only if the function is convex; on a non-convex function it can get stuck in a local minimum.

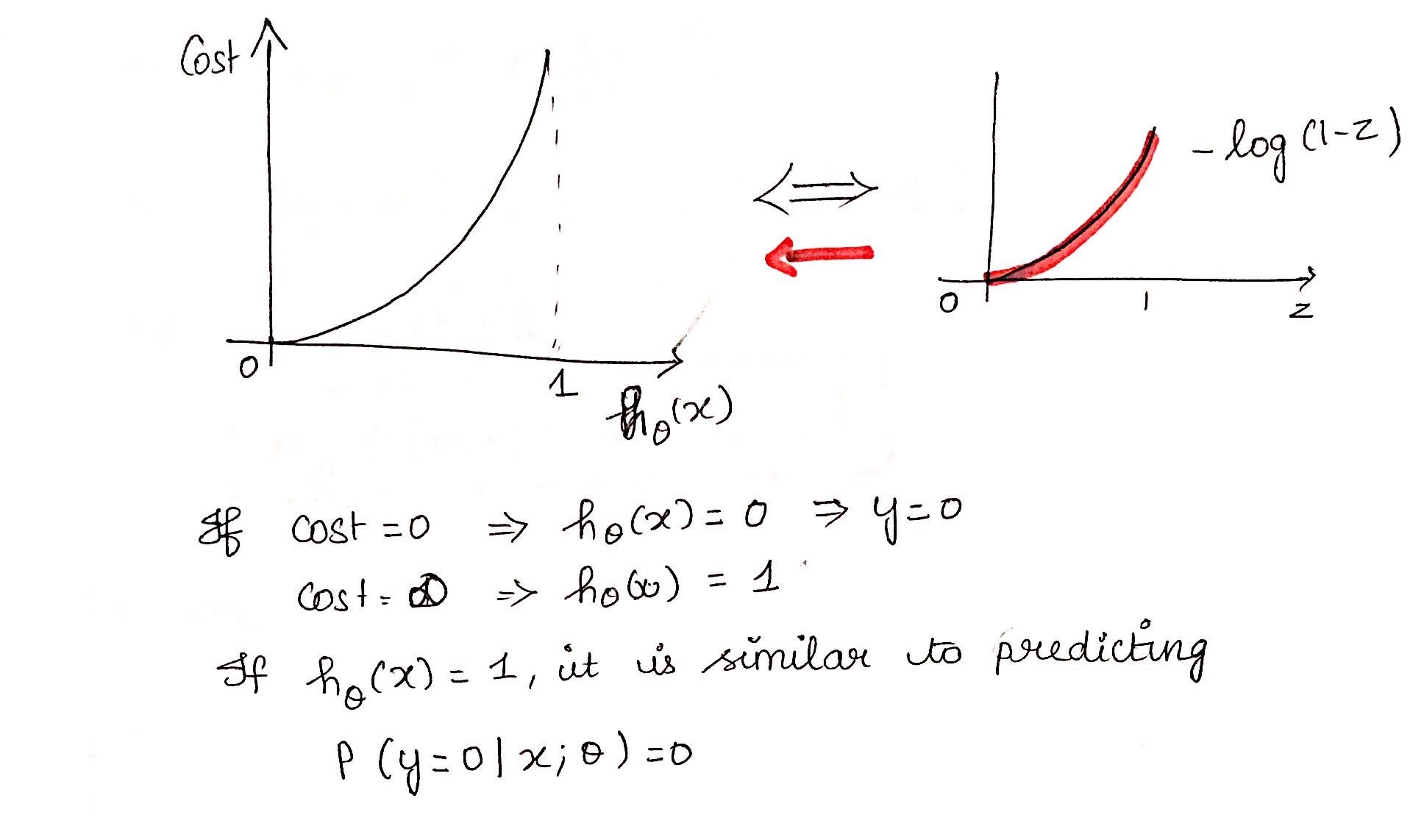
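
The convexity claim can be checked numerically on a toy case. The sketch below assumes a single training example (x = 1, y = 1) and a single weight w with no bias, which is a simplification made only for this illustration. It tests whether each cost curve has non-negative second differences, as a convex curve must.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# One training example with x = 1, y = 1, and a single weight w (no bias).
w = np.linspace(-6.0, 6.0, 241)
h = sigmoid(w)

mse_cost = (h - 1.0) ** 2   # squared error for y = 1
log_cost = -np.log(h)       # log loss for y = 1

# A convex curve has non-negative second differences everywhere.
mse_is_convex = bool(np.all(np.diff(mse_cost, n=2) >= 0))
log_is_convex = bool(np.all(np.diff(log_cost, n=2) >= 0))
```

On this grid the squared-error curve fails the check (it bends downward for strongly negative w), while the log loss passes it.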

Cost function explanation
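
For reference, the cost that logistic regression conventionally assigns to a single example is the log loss shown below. The notation is assumed here to match the hypothesis hΘ(x) defined earlier.

```latex
\mathrm{Cost}\big(h_\Theta(x), y\big) =
\begin{cases}
-\log\big(h_\Theta(x)\big)     & \text{if } y = 1 \\
-\log\big(1 - h_\Theta(x)\big) & \text{if } y = 0
\end{cases}
```

When y = 1, the cost is close to 0 if the prediction is close to 1 and grows without bound as the prediction approaches 0. The y = 0 case mirrors this.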

Simplified cost function
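
Since y is always 0 or 1, the two cases of the log loss are usually combined into a single expression and averaged over the training set. The form below is the standard one; m (the number of training examples) and the superscript (i) for the i-th example are notation assumed here.

```latex
J(\Theta) = -\frac{1}{m} \sum_{i=1}^{m}
\Big[ y^{(i)} \log h_\Theta\big(x^{(i)}\big)
    + \big(1 - y^{(i)}\big) \log\big(1 - h_\Theta\big(x^{(i)}\big)\big) \Big]
```

For each example only one of the two terms is non-zero: the first when y = 1, the second when y = 0.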

Why this cost function?

The negative sign is there because training aims to maximize the likelihood of the observed labels, and this is done by minimizing a loss function, namely the negative log-likelihood. Decreasing the cost therefore increases the likelihood, assuming the samples are independent and identically distributed (i.i.d.).
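
The link between cost and likelihood can be seen on a small example. The sketch below runs plain gradient descent on a made-up one-feature dataset and records both the log loss and the likelihood of the labels at every step. The dataset, learning rate, and step count are arbitrary choices for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def log_loss(y, p):
    """Average negative log-likelihood of labels y under probabilities p."""
    return -np.mean(y * np.log(p) + (1 - y) * np.log(1 - p))

def likelihood(y, p):
    """Joint probability of the labels, assuming i.i.d. samples."""
    return np.prod(np.where(y == 1, p, 1 - p))

# Tiny made-up dataset: one feature, four samples.
X = np.array([-2.0, -1.0, 1.0, 2.0])
y = np.array([0, 0, 1, 1])

w, b, lr = 0.0, 0.0, 0.1
history = []  # (cost, likelihood) recorded before each update
for _ in range(50):
    p = sigmoid(w * X + b)
    history.append((log_loss(y, p), likelihood(y, p)))
    # Gradient of the log loss with respect to w and b.
    w -= lr * np.mean((p - y) * X)
    b -= lr * np.mean(p - y)
```

In this run the recorded cost falls at every step while the likelihood rises, because for m samples the likelihood equals exp(-m × cost).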
