Data Scraping

On Monday, 31 August, we attended a guest lecture at Sabudh Foundation that introduced us to the concept of data scraping.

What is Data Scraping?

Data scraping, also known as web scraping, is the process of extracting information from a website and saving it to a CSV or other local file. It is one of the most efficient ways to collect data from the web and, in some cases, to channel that data to another website. Common uses include:

- Research for web content/business intelligence

- Pricing for travel booking sites or price comparison sites

- Finding sales leads or conducting market research by crawling public data sources (e.g. Yell and Twitter)

- Sending product data from an e-commerce site to another online vendor (e.g. Google Shopping)

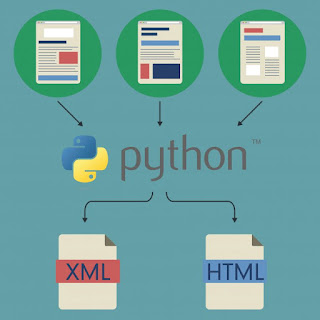
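To make the definition concrete, here is a minimal sketch of the whole idea: pull values out of HTML and save them as CSV. It assumes Beautiful Soup (bs4), covered later in this post, is installed. The product markup, class names, and column names are hypothetical, and a hard-coded string stands in for a downloaded page so the example runs offline.

```python
import csv
import io

from bs4 import BeautifulSoup

# Hypothetical product listing standing in for a downloaded page.
html = """
<div class="product"><span class="name">Widget</span><span class="price">9.99</span></div>
<div class="product"><span class="name">Gadget</span><span class="price">19.50</span></div>
"""

soup = BeautifulSoup(html, "html.parser")

# Pull one row per product out of the HTML.
rows = []
for product in soup.find_all("div", class_="product"):
    name = product.find("span", class_="name").text
    price = product.find("span", class_="price").text
    rows.append({"name": name, "price": price})

# Write the rows as CSV. io.StringIO stands in for a real file, which you
# would open with open("products.csv", "w", newline="").
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=["name", "price"])
writer.writeheader()
writer.writerows(rows)
csv_text = buffer.getvalue()
print(csv_text)
```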

Scraping using Python

There are numerous packages available for web scraping in Python, but only a handful of them are needed to scrape almost any site. Some of these libraries are:

- Requests

- BeautifulSoup

- lxml

- Selenium

- Scrapy

Note: In this lecture, we were introduced to Beautiful Soup.

The Requests Module

The Requests module is a simple yet powerful HTTP library, which means it can be used to access web pages. It is the library that handles how we communicate with websites.

Its simplicity is its greatest strength: for basic tasks, one can use it without reading much of the documentation.

For example, to pull down the contents of a page:

import requests

page = requests.get('http://examplesite.com')

contents = page.content

The variable contents holds the raw HTML (as bytes) of the page at the URL passed to the get() method of the Requests library.
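In practice, a fetch is often wrapped with a couple of safeguards. The sketch below is one way to do that, assuming you want a timeout and an exception on HTTP errors; fetch_html is a hypothetical helper name, not part of Requests. It returns page.text (a decoded str) rather than page.content (raw bytes).

```python
import requests


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Fetch a page and return its HTML as text.

    A minimal sketch: the timeout value and raising on HTTP errors are
    assumptions, not something the lecture prescribed.
    """
    # Without a timeout, requests can wait indefinitely on a slow server.
    page = requests.get(url, timeout=timeout)
    # Raise requests.HTTPError for 4xx/5xx responses instead of parsing an error page.
    page.raise_for_status()
    # page.content is raw bytes; page.text is those bytes decoded to str
    # using the encoding requests infers for the response.
    return page.text
```

Usage would look like html = fetch_html('http://examplesite.com'). Returning text is a design choice: if the page is going straight to Beautiful Soup, passing page.content instead lets Beautiful Soup do its own encoding detection.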

Beyond fetching pages, the Requests module can also call APIs, submit forms, and much more.
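To illustrate the API and form cases without contacting a real server, the sketch below builds requests with requests.Request(...).prepare() and inspects them instead of sending them. The URL paths and field names are hypothetical placeholders.

```python
import requests

# Build (but do not send) requests so we can inspect what would go over the wire.

# GET with query parameters, as typically used when calling an API.
api_call = requests.Request(
    "GET", "http://examplesite.com/search", params={"q": "python", "page": 2}
).prepare()
print(api_call.url)  # http://examplesite.com/search?q=python&page=2

# POST with form fields, as a browser does when submitting an HTML form.
form_post = requests.Request(
    "POST", "http://examplesite.com/login", data={"user": "alice", "password": "secret"}
).prepare()
print(form_post.headers["Content-Type"])  # application/x-www-form-urlencoded
print(form_post.body)  # user=alice&password=secret
```

In everyday code you would send these directly with requests.get(url, params=...) and requests.post(url, data=...); for JSON APIs, the response's .json() method decodes the body.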

Beautiful Soup

Beautiful Soup (BS4) is a parsing library that can use different parsers. A parser is simply a program that can extract data from HTML and XML documents.
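The parser is chosen by name when the BeautifulSoup object is created. The sketch below, assuming bs4 is installed and lxml may or may not be, feeds the same deliberately broken HTML to Python's built-in html.parser and to lxml. Parsers can build different trees from broken markup, so compare the printed output rather than assuming they agree.

```python
from bs4 import BeautifulSoup, FeatureNotFound

# Deliberately sloppy HTML: the <li> tags are never closed.
html = "<ul><li>One<li>Two</ul>"

results = {}
for parser in ("html.parser", "lxml"):
    try:
        soup = BeautifulSoup(html, parser)
    except FeatureNotFound:
        # lxml is a separate install; skip it if it is missing.
        print(f"{parser}: not installed")
        continue
    # Record the text of each <li> as this parser understood it.
    results[parser] = [li.get_text() for li in soup.find_all("li")]
    print(parser, results[parser])
```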

One advantage of BS4 is that it automatically detects encodings, which lets it gracefully handle HTML documents containing special characters. BS4 also helps us navigate a parsed document and find what we need, which makes it quick and painless to build common applications.
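A small illustration of both points, assuming bs4 is installed: the page is passed in as bytes (as page.content would be), so Beautiful Soup decodes it itself, and then a few navigation steps walk the tree. The menu markup is hypothetical.

```python
from bs4 import BeautifulSoup

# Raw bytes, like page.content from requests; the <meta> tag declares UTF-8.
raw = (
    '<html><head><meta charset="utf-8"><title>Menu</title></head>'
    "<body><p>Café</p><p>Crème brûlée</p></body></html>"
).encode("utf-8")

# Passing bytes lets Beautiful Soup work out the encoding on its own.
soup = BeautifulSoup(raw, "html.parser")
print(soup.original_encoding)  # the encoding it settled on

# Dotted access returns the first matching tag anywhere in the document.
print(soup.title.text)  # Menu
print(soup.p.text)  # Café
# Move sideways through the tree to the next <p> sibling.
print(soup.p.find_next_sibling("p").text)  # Crème brûlée
```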

For example, to find all the links in the web page we fetched earlier with Requests, we create a BeautifulSoup object from its HTML and call the object's find_all() method.

soup is an instance of the BeautifulSoup class, which takes in the HTML of the website and the name of the parser used to go through that HTML.

Once the object is created, we use its find_all() method to get all the anchor tags ('a'); it returns a list of all the links present in the web page.
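A minimal sketch of these steps, assuming bs4 is installed. To keep it runnable offline, a hypothetical HTML string stands in for the contents variable fetched with Requests; with a live page you would pass page.content instead.

```python
from bs4 import BeautifulSoup

# Hypothetical HTML standing in for page.content fetched with requests.
contents = """
<html><body>
  <a href="https://example.com/one">One</a>
  <p>Some text</p>
  <a href="/two">Two</a>
</body></html>
"""

# Build the soup with Python's built-in HTML parser.
soup = BeautifulSoup(contents, "html.parser")

# find_all('a') returns every anchor tag, in document order.
links = soup.find_all("a")
for link in links:
    print(link.get("href"), link.text)
```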

Methods and Attributes in Beautiful Soup

text

The Beautiful Soup object (and every tag inside it) has a text attribute that returns the plain text of the HTML, with the tags stripped out. Given a simple soup of <p>Hello world</p>, the text attribute returns Hello world.
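A quick check of the example above, assuming bs4 is installed. The second half shows a detail worth knowing: text joins the strings from neighbouring tags with nothing in between, while get_text() (the method behind text) accepts a separator.

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup("<p>Hello world</p>", "html.parser")
print(soup.text)  # Hello world

# text concatenates every string inside a tag, so words from
# neighbouring tags can run together.
nested = BeautifulSoup("<div><p>Hello</p><p>world</p></div>", "html.parser")
print(nested.text)  # Helloworld

# get_text() takes a separator and can strip surrounding whitespace.
print(nested.get_text(" ", strip=True))  # Hello world
```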

find()

The find() method returns the first instance of a given HTML tag, such as an anchor or paragraph tag. Once we have that tag, we can get its text using the text attribute.
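A minimal sketch of find(), assuming bs4 is installed; the HTML is hypothetical. It also shows that find() returns None when no tag matches, which is why it is worth checking before reading .text.

```python
from bs4 import BeautifulSoup

html = """
<h1>Course notes</h1>
<p>Scraping basics</p>
<p>Second paragraph</p>
"""
soup = BeautifulSoup(html, "html.parser")

# Only the first <p> is returned, even though there are two.
first_p = soup.find("p")
print(first_p.text)  # Scraping basics

# find() returns None when nothing matches, so check before using .text.
missing = soup.find("table")
print(missing)  # None
if missing is not None:
    print(missing.text)
```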

attrs

attrs is similar to text, but instead of a tag's text it gives us the values of the tag's attributes, as a dictionary. For example, we can get the src attribute of an image tag or the href value of an anchor tag.
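A minimal sketch of attrs, assuming bs4 is installed and using hypothetical markup. Because attrs is a dictionary, a single value can also be read with tag['name'] or tag.get('name').

```python
from bs4 import BeautifulSoup

html = '<a href="https://example.com/docs">Docs</a><img src="logo.png" alt="Site logo">'
soup = BeautifulSoup(html, "html.parser")

img = soup.find("img")
print(img.attrs)  # {'src': 'logo.png', 'alt': 'Site logo'}

# Individual attributes can be read like dictionary keys...
print(img["src"])  # logo.png

# ...or with .get(), which returns None instead of raising KeyError if absent.
link = soup.find("a")
print(link.get("href"))  # https://example.com/docs
print(link.get("title"))  # None
```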
